The vqgan clip model

Introduction

Very often, AI promises don’t deliver. But sometimes they deliver much beyond your expectations. CLIP VQGAN is one of them.

What can you create ?

Select a prompt and the model will give you an image corresponding to the inputed text.

Some examples

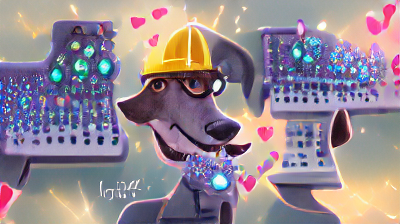

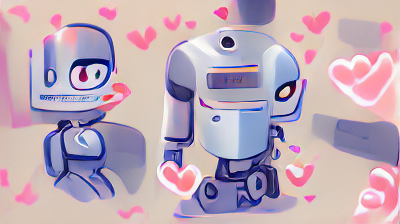

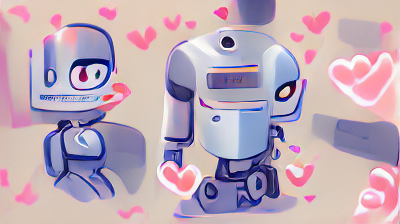

Input : I’m in love with a robot

Input : I’m in love with a robot

Input : Back to the pit

Input : Back to the pit

Input : Chicken surf

Input : Chicken surf

Input : Toaster forest

Input : Toaster forest

Input : The rave

Input : The rave

Input : Colorful mushrooms

Input : Colorful mushrooms

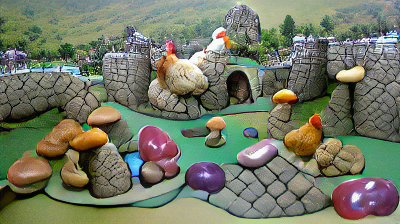

Input : Chicken boulder land

Input : Chicken boulder land

Where can you do it yourself ?

There is an easy access to a colab notebook.

I had a lot of fun with it.

What are the details of the algorithm ?

CLIP

CLIP has been developped by OpenAI as a way to bridge the gap between image and textual representation at a large scale. This allow them to learn a very performant zero-shot visual model (they don’t need to label any image manually).

As it comes from OpenAI there is already a lot of litterature covering the subject, like their blog post

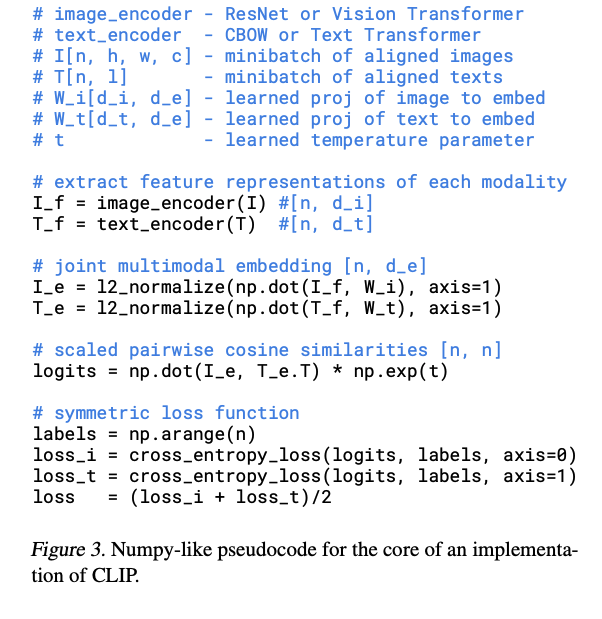

What I was curious about was the implementation used in order to get the powerful embedding they are getting. The following screenshot from their paper describes how easy the general idea is.

They use :

- A very large dataset of image and caption couples

- A slightly modified resnet for image features and a transformer for text embedding

- An embedding transformation is learned for each source of information

- A softmax among the batch forces the network to learn what is the most appropriate sentence for each image

VQGAN

This is a mix between a transformer and a GAN architecture.

Some useful links :

In short :

- The work of the generator is made simpler with a codebook of quantized features

- A transformer learns to associate which codes go well together

- Codes from the generated image are constructed in a sliding window manner

- The generator only has to learn to reconstruct the relationship between codes and real images.

Generation

Forward :

The generated image goes through the image path of the CLIP model and the embedding is compared to the text embeding 1 2. We maximise the similarity between the two. To have a better looking result, a batch of crop and different data augmented version of the image are passed in a batch.

This article discusses some common methods to have a more stable optimization process.

Backward :

So what do we optimize exactly ?

In fact, the optimized image is parametrized by the VQGAN internal representation 1. We start with random noise and pass it through the Vqgan model to obtain a starting z 2. The total backward loss is computed through all VQGAN network and CLIP 3.

Appendix

Nightmare material

What everyone didn’t need !

Faery tale characters

What does a paper company cat ceo looks like ?